ELK Overview

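ELK is a log-collection stack made up of three Elastic components that work together: Elasticsearch (storage and search), Logstash (collection and processing), and Kibana (visualization).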

ELK can also be used together with Beats; a later article will cover that.

Environment Setup

Earlier articles covered setting up the ELK environment and integrating Spring Boot with it.

This tutorial builds on those two articles.

Project Changes

pom.xml

Add the logstash-logback-encoder dependency, which lets Logback send its logs to Logstash:

```xml
<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>6.3</version>
</dependency>
```

Logback Configuration

Create a file named logback-spring.xml in the resources folder (src/main/resources):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE configuration>
<configuration>
    <!-- Spring Boot defaults: log patterns such as FILE_LOG_PATTERN, plus the CONSOLE appender -->
    <include resource="org/springframework/boot/logging/logback/defaults.xml"/>
    <include resource="org/springframework/boot/logging/logback/console-appender.xml"/>

    <property name="APP_NAME" value="xxxx"/>
    <property name="LOG_FILE_PATH" value="${LOG_FILE:-${LOG_PATH:-${LOG_TEMP:-${java.io.tmpdir:-/tmp}}}/logs}"/>
    <contextName>${APP_NAME}</contextName>

    <!-- Local log file, rolled over daily and kept for 30 days -->
    <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_FILE_PATH}/${APP_NAME}-%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>30</maxHistory>
        </rollingPolicy>
        <encoder>
            <pattern>${FILE_LOG_PATTERN}</pattern>
        </encoder>
    </appender>

    <!-- Ship each log event as JSON over TCP to Logstash -->
    <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <!-- Must match the host/port of the tcp input in the Logstash config -->
        <destination>localhost:4560</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"/>
    </appender>

    <root level="INFO">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="FILE"/>
        <appender-ref ref="LOGSTASH"/>
    </root>
</configuration>
```
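Before starting the application, it can help to confirm that the Logstash `tcp` input (configured in the next section) accepts events at all. Its `json_lines` codec expects one JSON object per line, so a single hand-written event is enough to exercise it. The following is a minimal, stdlib-only Java sketch of such a test client; it is not how `logstash-logback-encoder` works internally. The class name `JsonLinesSender`, the field names (`@timestamp`, `level`, `logger_name`, `message`, loosely modeled on LogstashEncoder's default output), and the `localhost:4560` address are assumptions for illustration.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical test client for a Logstash tcp input with codec => json_lines.
// NOT the logstash-logback-encoder implementation; field names are assumptions.
class JsonLinesSender {

    // Escape characters that may not appear raw inside a JSON string.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n");  break;
                case '\r': sb.append("\\r");  break;
                case '\t': sb.append("\\t");  break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Serialize flat string fields as one JSON object terminated by '\n'.
    // json_lines splits the TCP stream on newlines, so the '\n' is required.
    static String toJsonLine(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (!first) {
                sb.append(',');
            }
            first = false;
            sb.append('"').append(escape(e.getKey())).append("\":\"")
              .append(escape(e.getValue())).append('"');
        }
        return sb.append("}\n").toString();
    }

    // Open a TCP connection, write one event, and close the connection.
    static void send(String host, int port, String jsonLine) throws IOException {
        try (Socket socket = new Socket(host, port);
             OutputStream out = socket.getOutputStream()) {
            out.write(jsonLine.getBytes(StandardCharsets.UTF_8));
            out.flush();
        }
    }

    public static void main(String[] args) {
        Map<String, String> event = new LinkedHashMap<>();
        event.put("@timestamp", Instant.now().toString());
        event.put("level", "INFO");
        event.put("logger_name", "manual-test");
        event.put("message", "hello from a hand-written json_lines event");
        String line = toJsonLine(event);
        System.out.print(line);
        try {
            // Assumption: Logstash listens on localhost:4560 as in this article.
            send("localhost", 4560, line);
        } catch (IOException e) {
            System.err.println("Could not reach Logstash: " + e.getMessage());
        }
    }
}
```

Because the `tcp` input adds `myid => "aptst-log"` to everything it receives, a test event like this is routed to the same daily `springboot-logstash-*` index as the application's own logs.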

Logstash Configuration

```conf
input {
  jdbc {
    add_field => { "myid" => "jdbc" }
    jdbc_connection_string => "<mysql address>"
    jdbc_user => "xxxxxxxxxxxxx"
    jdbc_password => "xxxxxxxxxxxx"
    ## Location of the JDBC driver JAR
    jdbc_driver_library => "/usr/share/logstash/config/mysql-connector-java-5.1.47.jar"
    jdbc_driver_class => "com.mysql.jdbc.Driver"
    jdbc_paging_enabled => "true"
    jdbc_page_size => "50000"
    statement => "select id,username,realname,age,birth from tb_user"
    ## Run once every minute (cron syntax)
    schedule => "* * * * *"
  }
  tcp {
    add_field => { "myid" => "aptst-log" }
    mode => "server"
    host => "0.0.0.0"
    ## Must match <destination> in logback-spring.xml
    port => 4560
    codec => json_lines
  }
}

output {
  if [myid] == "jdbc" {
    elasticsearch {
      ## Elasticsearch address
      hosts => "<elasticsearch address>:9200"
      ## Index name
      index => "index-user"
      ## Use the row's primary key as the document ID, so rows re-read every
      ## minute overwrite existing documents instead of creating duplicates
      document_id => "%{id}"
      ## Document type; mapping types are deprecated as of Elasticsearch 7.x,
      ## so omit this option on newer versions
      document_type => "user"
    }
    stdout {
      codec => json_lines
    }
  }
  if [myid] == "aptst-log" {
    elasticsearch {
      ## Elasticsearch address
      hosts => "<elasticsearch address>:9200"
      ## Index name: one index per day
      index => "springboot-logstash-%{+YYYY.MM.dd}"
    }
  }
}
```
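The `output` section routes each event by the `myid` field its input added. The Java sketch below mirrors that routing so the resulting index names are easy to reason about. `LogstashIndexRouter` is a hypothetical class that only imitates Logstash's behavior. It encodes two details: `%{+YYYY.MM.dd}` is formatted from the event's `@timestamp` in UTC, and Logstash's Joda-style `YYYY` (year of era) corresponds to `yyyy` in `java.time` (where `YYYY` would mean week-based year).

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

// Hypothetical model of the output conditionals in the Logstash config above.
// It imitates Logstash's behavior for illustration; it is not part of Logstash.
class LogstashIndexRouter {

    // Joda "YYYY.MM.dd" in Logstash == java.time "yyyy.MM.dd"; Logstash uses UTC.
    private static final DateTimeFormatter DAILY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd").withZone(ZoneOffset.UTC);

    // Index an event would be written to, or null when no conditional matches
    // (such an event is not sent to Elasticsearch at all).
    static String indexFor(String myid, Instant timestamp) {
        if ("jdbc".equals(myid)) {
            return "index-user";
        }
        if ("aptst-log".equals(myid)) {
            return "springboot-logstash-" + DAILY.format(timestamp);
        }
        return null;
    }

    public static void main(String[] args) {
        Instant t = Instant.parse("2020-05-01T23:30:00Z");
        System.out.println(indexFor("aptst-log", t)); // springboot-logstash-2020.05.01
        System.out.println(indexFor("jdbc", t));      // index-user
    }
}
```

Because the date comes from UTC, the daily index rolls over at UTC midnight: on a server running at UTC+8, for example, logs written between 00:00 and 08:00 local time land in the previous day's index.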

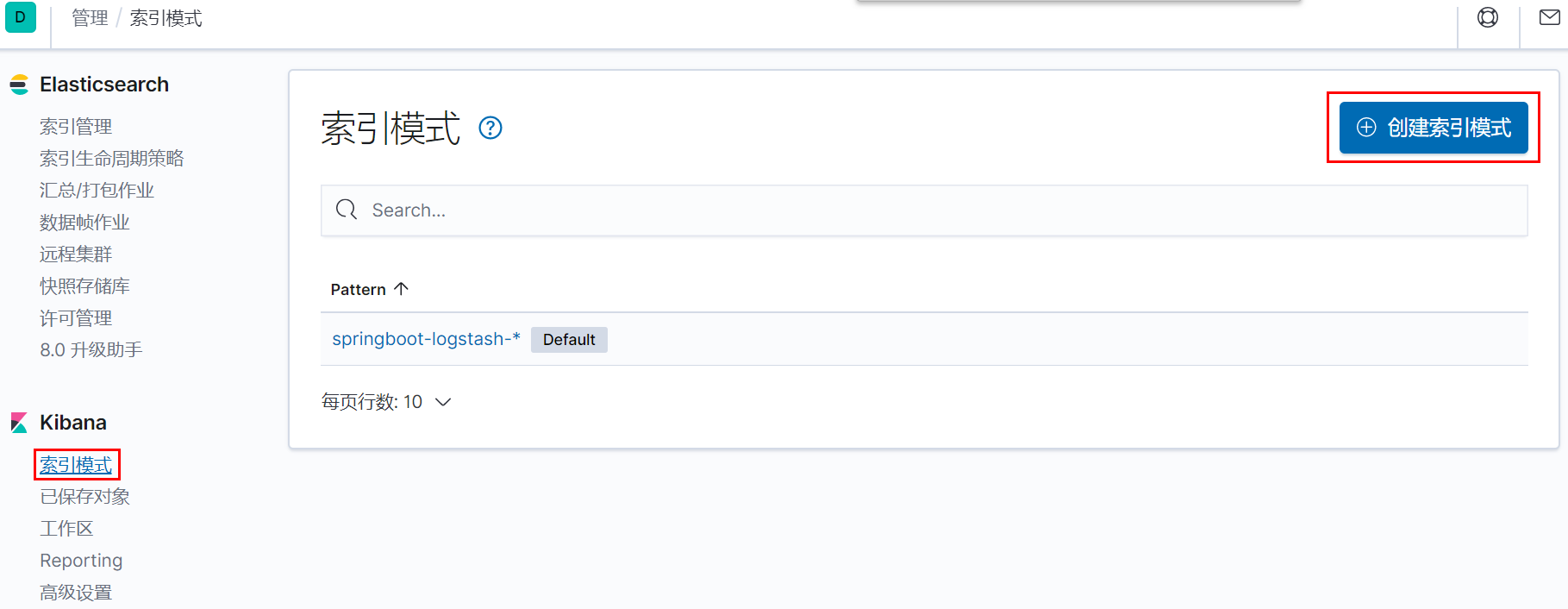

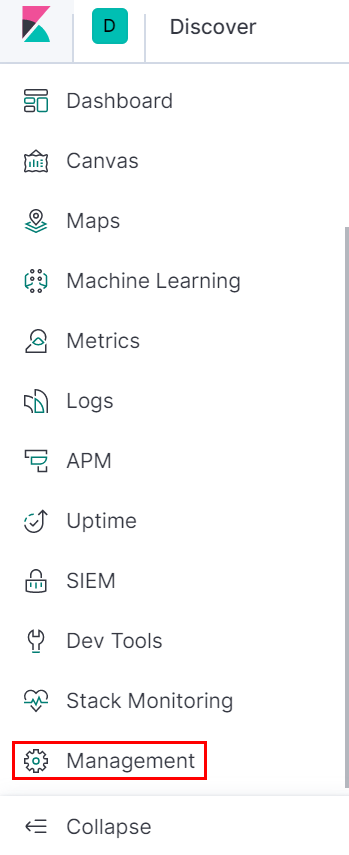

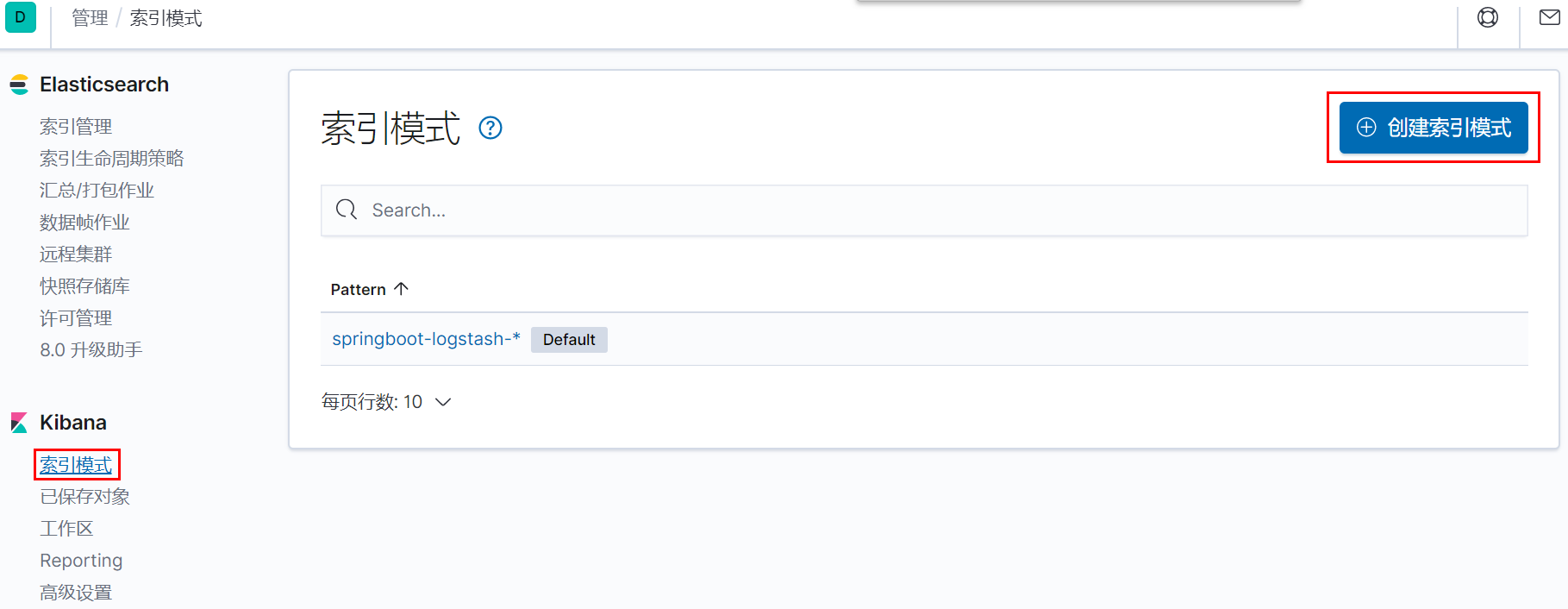

Kibana Management

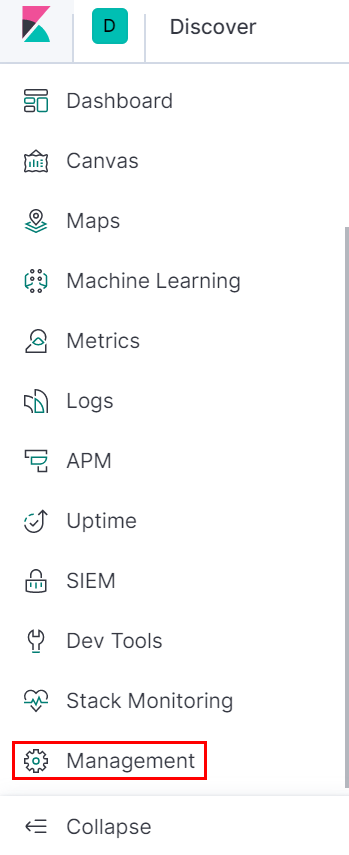

Open the Kibana Management page.

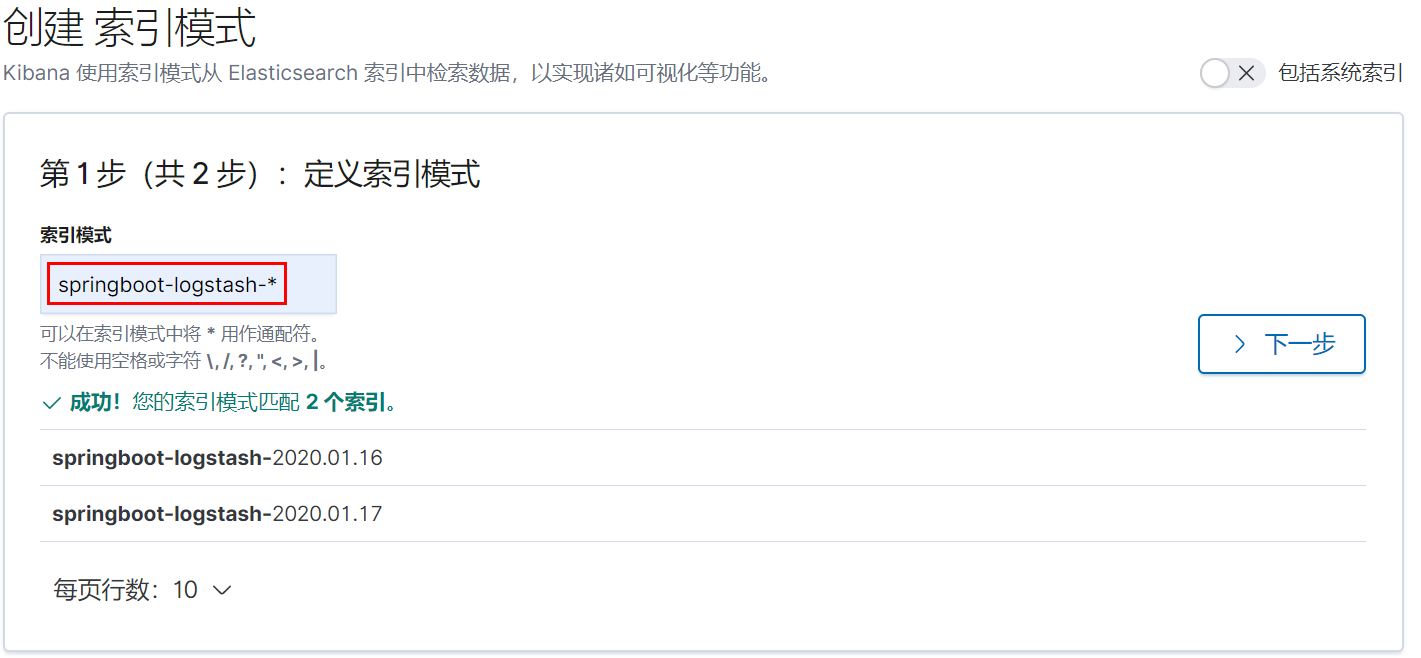

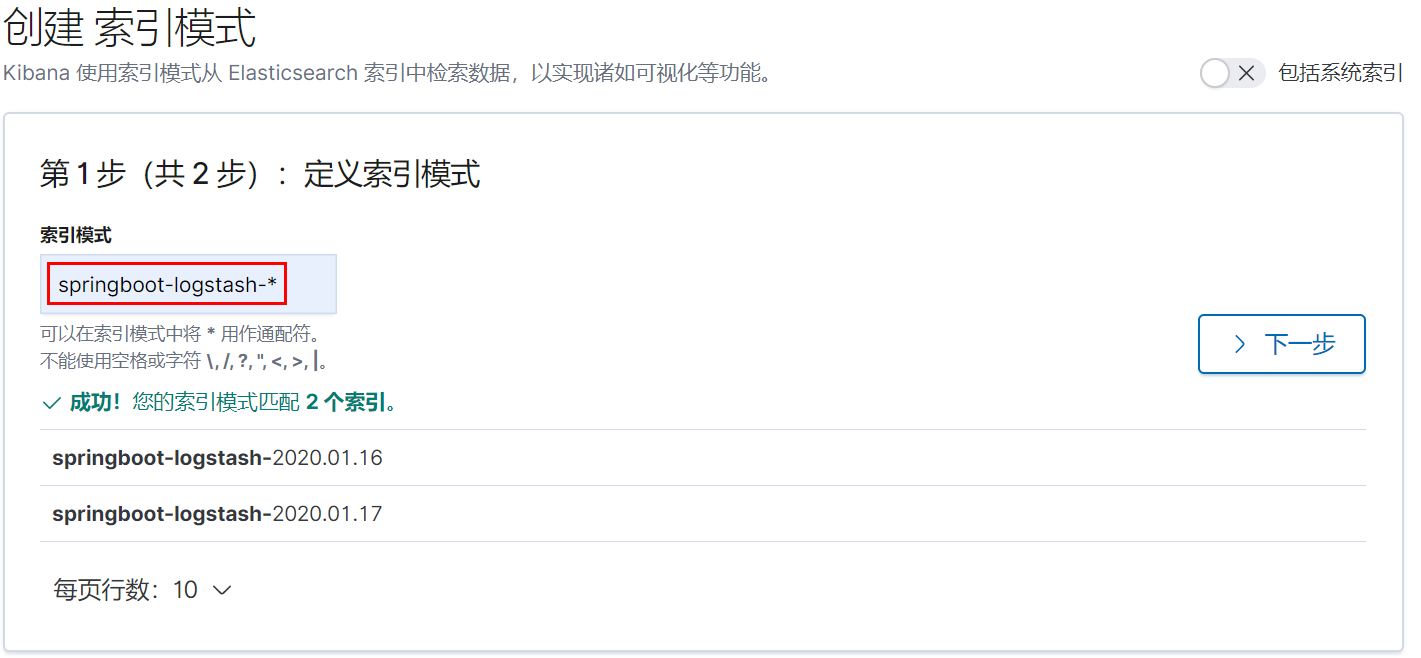

Create an index pattern, entering springboot-logstash-* as the index pattern name.

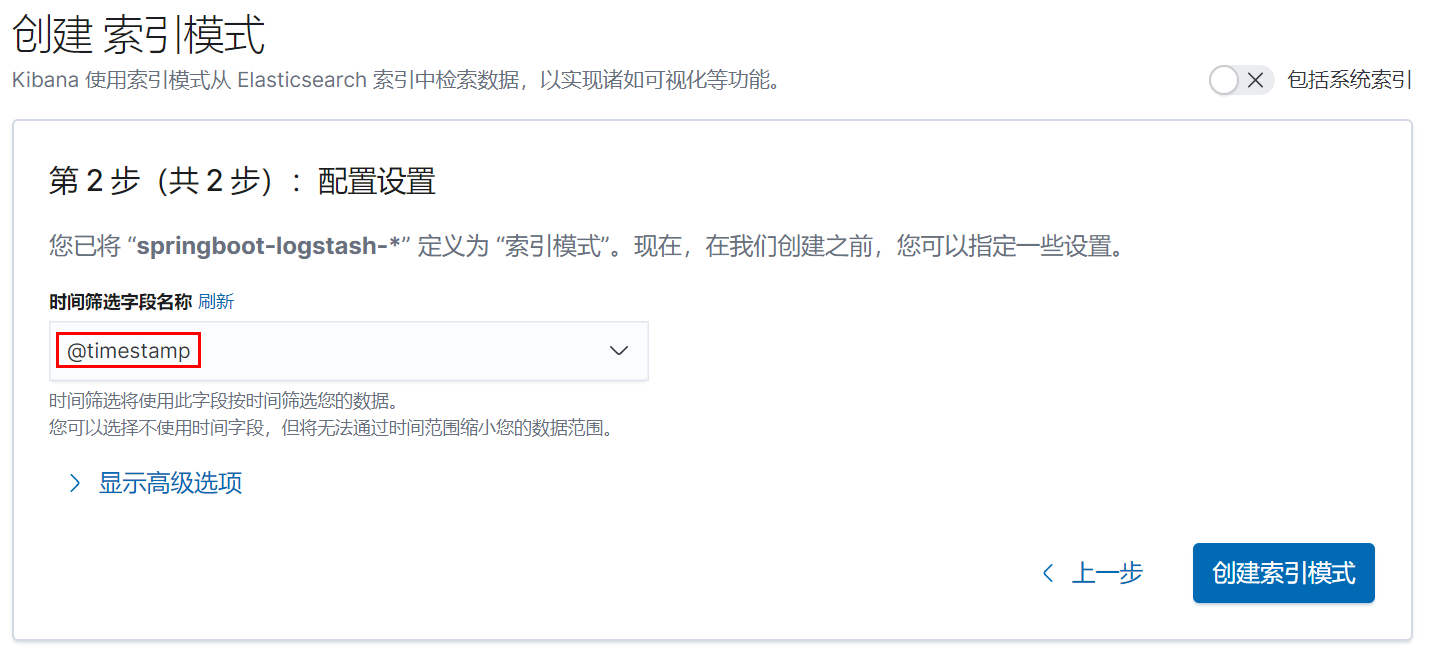

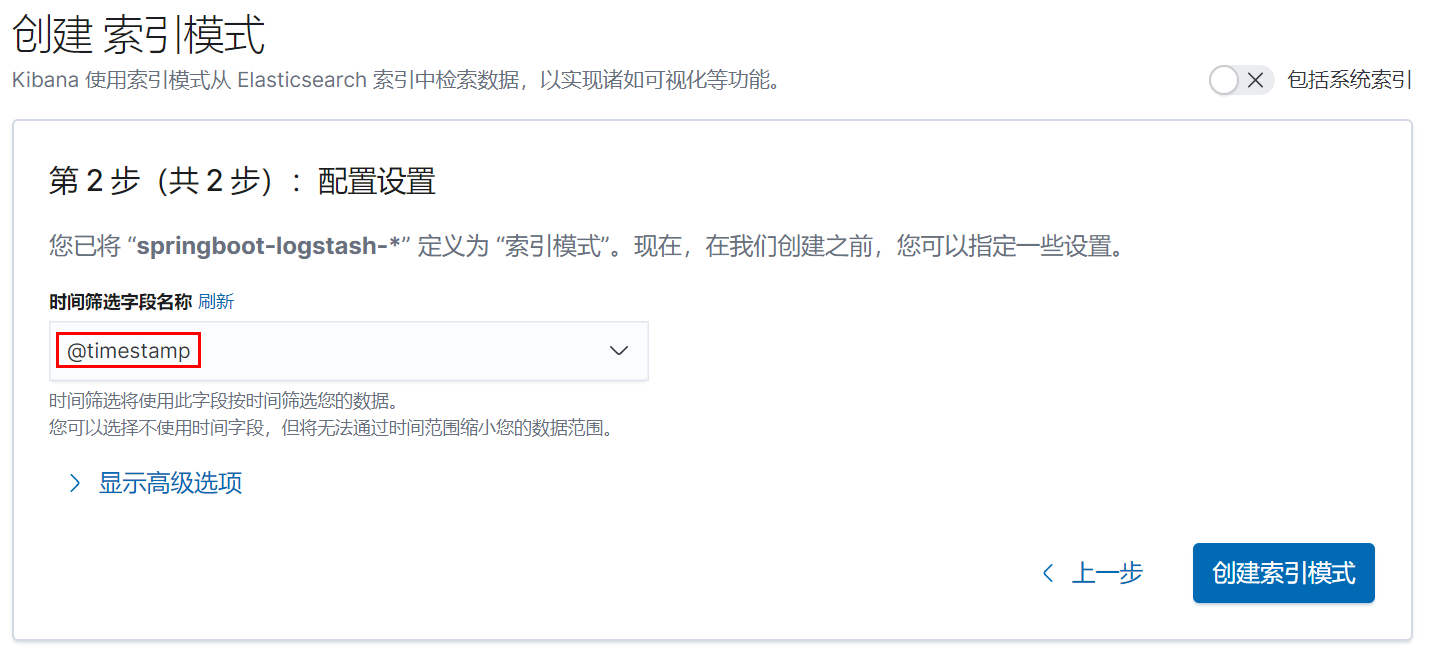

Select @timestamp as the time filter field name.
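If Kibana reports that the pattern matches no indices, listing the indices directly in Elasticsearch shows whether Logstash has written any yet. The sketch below calls the Elasticsearch `_cat/indices` API with the JDK's `java.net.http.HttpClient` (Java 11+). The default address `http://localhost:9200`, the absence of authentication, and the class name `ListLogIndices` are assumptions for illustration.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical helper: list the daily log indices via the Elasticsearch _cat API.
class ListLogIndices {

    // Build e.g. http://localhost:9200/_cat/indices/springboot-logstash-*?v
    // (?v adds a header row to the plain-text table).
    static URI catIndicesUri(String esBaseUrl, String indexPattern) {
        String base = esBaseUrl.endsWith("/")
                ? esBaseUrl.substring(0, esBaseUrl.length() - 1)
                : esBaseUrl;
        return URI.create(base + "/_cat/indices/" + indexPattern + "?v");
    }

    public static void main(String[] args) throws InterruptedException {
        // Assumption: Elasticsearch is reachable here without authentication.
        String es = args.length > 0 ? args[0] : "http://localhost:9200";
        HttpRequest request = HttpRequest
                .newBuilder(catIndicesUri(es, "springboot-logstash-*"))
                .GET()
                .build();
        try {
            HttpResponse<String> response = HttpClient.newHttpClient()
                    .send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println("HTTP " + response.statusCode());
            System.out.println(response.body());
        } catch (IOException e) {
            System.err.println("Could not reach Elasticsearch: " + e.getMessage());
        }
    }
}
```

An empty table (header row only) means no log events have reached Elasticsearch yet, which points back at the Logback-to-Logstash leg rather than at Kibana.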